Biography

I am an ex-Neuroscientist now working on Artificial General Intelligence.

I’m working as a Staff Research Scientist at Google DeepMind, more precisely on concept understanding, structured representation learning and RL.

I worked for a long while on unsupervised structure learning and generative models, leveraging them to make predictions and trying to make model-based Deep Reinforcement Learning work like it should.

But like everybody, more recently I’m mostly working on assessing massive vision-language models for their common-sense abilities, like an ex-neuroscientist would 😃

- Machine Learning

- Unsupervised Structure Learning

- Deep Reinforcement Learning

- Computational Neuroscience

PhD in Computational Neuroscience and Machine Learning

UCL Gatsby Computational Neuroscience Unit

MSc in Computer Science / Biocomputing

Ecole Polytechnique Federale de Lausanne (EPFL)

Skills

Experience

Lead a variety of research efforts tackling several core AI problems.

Includes:

- Model-based RL leveraging structured generative models and Transformer-based world models.

- Episodic learning of object abstractions

- Video structured generative models and diffusion models

Responsibilities:

- Research & Tech lead and management (~10 Scientists/Engineers)

- Model building, training and optimization for large-scale distributed systems

- Analysis and presentation to core stakeholders

- Integration, testing and debugging

Field-defining research on object-based/structured generative models, and on how to leverage them (e.g. with graph neural networks) for learning better autonomous agents.

- Research lead and core contributor (~3 Scientists/Engineers)

- Disentanglement research, environment development, benchmarks and advanced data collection.

- Deep Reinforcement Learning research (model-free, model-based, planning)

Core research on concepts and generative models; co-author on papers that started the disentangled representation research sub-field.

- Main co-author on β-VAE (4400 citations), Understanding Disentangling, SCAN, DARLA, MONet, IODINE, and many others.

- Designed and released the dSprites dataset, among other core datasets used by the community.

Featured Publications

Building embodied AI systems that can follow arbitrary language instructions in any 3D environment is a key challenge for creating general AI. Accomplishing this goal requires learning to ground language in perception and embodied actions, in order to accomplish complex tasks. The Scalable, Instructable, Multiworld Agent (SIMA) project tackles this by training agents to follow free-form instructions across a diverse range of virtual 3D environments, including curated research environments as well as open-ended, commercial video games. Our goal is to develop an instructable agent that can accomplish anything a human can do in any simulated 3D environment. Our approach focuses on language-driven generality while imposing minimal assumptions. Our agents interact with environments in real-time using a generic, human-like interface: the inputs are image observations and language instructions and the outputs are keyboard-and-mouse actions. This general approach is challenging, but it allows agents to ground language across many visually complex and semantically rich environments while also allowing us to readily run agents in new environments. In this paper we describe our motivation and goal, the initial progress we have made, and promising preliminary results on several diverse research environments and a variety of commercial video games.

To help agents reason about scenes in terms of their building blocks, we wish to extract the compositional structure of any given scene (in particular, the configuration and characteristics of objects comprising the scene). This problem is especially difficult when scene structure needs to be inferred while also estimating the agent’s location/viewpoint, as the two variables jointly give rise to the agent’s observations. We present an unsupervised variational approach to this problem. Leveraging the shared structure that exists across different scenes, our model learns to infer two sets of latent representations from RGB video input alone: a set of "object" latents, corresponding to the time-invariant, object-level contents of the scene, as well as a set of "frame" latents, corresponding to global time-varying elements such as viewpoint. This factorization of latents allows our model, SIMONe, to represent object attributes in an allocentric manner which does not depend on viewpoint. Moreover, it allows us to disentangle object dynamics and summarize their trajectories as time-abstracted, view-invariant, per-object properties. We demonstrate these capabilities, as well as the model’s performance in terms of view synthesis and instance segmentation, across three procedurally generated video datasets.

Data efficiency and robustness to task-irrelevant perturbations are long-standing challenges for deep reinforcement learning algorithms. Here we introduce a modular approach to addressing these challenges in a continuous control environment, without using hand-crafted or supervised information. Our Curious Object-Based seaRch Agent (COBRA) uses task-free intrinsically motivated exploration and unsupervised learning to build object-based models of its environment and action space. Subsequently, it can learn a variety of tasks through model-based search in very few steps and excel on structured hold-out tests of policy robustness.

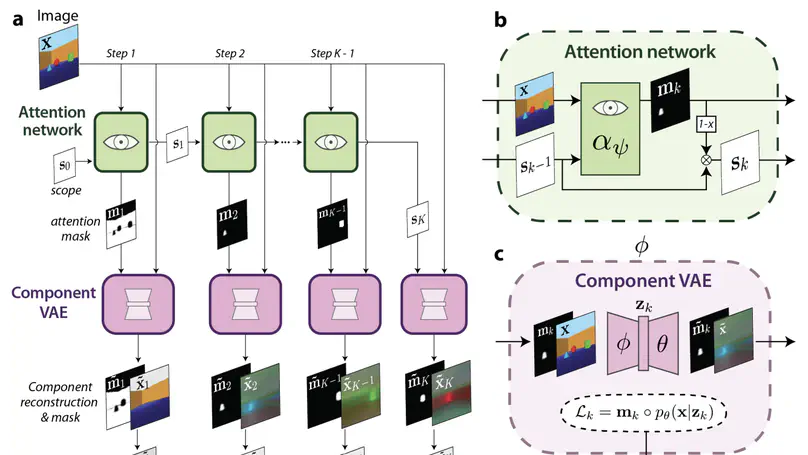

The ability to decompose scenes in terms of abstract building blocks is crucial for general intelligence. Where those basic building blocks share meaningful properties, interactions and other regularities across scenes, such decompositions can simplify reasoning and facilitate imagination of novel scenarios. In particular, representing perceptual observations in terms of entities should improve data efficiency and transfer performance on a wide range of tasks. Thus we need models capable of discovering useful decompositions of scenes by identifying units with such regularities and representing them in a common format. To address this problem, we have developed the Multi-Object Network (MONet). In this model, a VAE is trained end-to-end together with a recurrent attention network – in a purely unsupervised manner – to provide attention masks around, and reconstructions of, regions of images. We show that this model is capable of learning to decompose and represent challenging 3D scenes into semantically meaningful components, such as objects and background elements.

This paper describes SCAN (Symbol-Concept Association Network), a new framework for learning recombinable concepts in the visual domain. We first use the previously published beta-VAE (Higgins et al., 2017a) architecture to learn a disentangled representation of the latent structure of the visual world, before training SCAN to extract abstract concepts grounded in such disentangled visual primitives through fast symbol association.

Recent Publications

Contact

- loic@matthey.me

- London, UK

- Resume